Recent advances in semiconductors and large language models (LLMs) are enhancing the accessibility, integration, and distribution of sophisticated AI systems. Early proofs of concept provide glimpses into generative AI’s potential, signalling a new paradigm where consumer experiences and enterprise applications are inherently intelligent and interactive. This shift is poised to unlock new markets and spur an economic boom worth trillions of dollars.

Successful technological shifts often hinge on the convergence of compute power, data infrastructure, and user interfaces. In this piece, we highlight four companies prominent in our AI and tech ETFs that excel in these and other areas critical to the successful proliferation of AI.

Key Takeaways

- Nvidia: Preeminent chipmaker reshaping computing and powering AI’s future with its leading class of GPUs.

- Broadcom: Second-largest AI chip supplier and networking leader poised to benefit greatly from the ongoing AI capex cycle.

- Amazon: Tech giant starting to make its mark across the entire AI value chain.

- Intel: Processor designer and manufacturer a potential beneficiary of the AI inference and personal computer (PC) upgrade cycle.

Nvidia: Powering the Generative AI Supercycle with Top-of-the-Line GPUs and Software Stack

Nvidia’s long-term focus on graphics processing unit (GPU) chips, as opposed to the prevailing general-purpose central processing unit (CPU), has solidified its unique market position and competitive edge versus its peers. Its invention of the GPU in 1999 catalysed the expansion of the PC gaming industry, revolutionised computer graphics, and most recently helped ignite the modern AI era.

A mass disruption is unfolding in computing as AI gets embedded across everyday technology-based products and services. In the coming years, trillions of dollars’ worth of chips will be up for replacement, in datacentres and at the device level, to accommodate the adoption of generative AI. We estimate that the market for datacentre AI chips will approach US$200 billion annually by 2030, up from US$17 billion in 2022.1,2 This demand bodes well for Nvidia, which currently boasts a roughly 80% market share in datacentre acceleration, as GPUs are highly favoured for AI tasks due to their efficiency in handling complex datasets and calculations.3 Nvidia can benefit from a virtuous cycle in which increased AI usage raises demand for its products, boosting the company’s revenue and further strengthening its market leadership and research & development (R&D) capabilities.
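As a rough check on the market-size estimate above, the growth rate it implies can be worked out from the two endpoints. The sketch below assumes simple annual compounding over the eight years from 2022 to 2030; the function name `implied_cagr` is illustrative and not taken from any source.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the text: US$17 billion in 2022, about US$200 billion by 2030.
# Assumption: eight full years of annual compounding between the endpoints.
rate = implied_cagr(17.0, 200.0, 2030 - 2022)
print(f"Implied CAGR for datacentre AI chips: {rate:.1%}")
```

Under these assumptions the implied growth rate is roughly 36% a year. It is only an arithmetic restatement of the two endpoint figures, not an independent forecast.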

Past performance is not a reliable indicator of future performance.

Highlighting its ongoing innovation, Nvidia recently unveiled its new Blackwell GPU architecture and the Blackwell (B200) GPU, its most powerful AI processor to date for both AI training and inferencing.4 Nvidia also introduced a new end-to-end platform called the GB200 Grace Blackwell Superchip, which uses the B200 chips and boasts inference capabilities nearly 30 times greater and training capabilities nearly 4 times greater than those of Nvidia’s already highly coveted H100 chips, on which ChatGPT and other GPT language models were trained.5 The Blackwell architecture will allow organisations to build and run real-time generative AI on trillion-parameter LLMs at up to 25 times lower cost and energy consumption than its predecessor, the Hopper platform.6

Nvidia's leading position is reinforced by its Compute Unified Device Architecture (CUDA), a parallel computing platform and application programming interface (API) that enables AI developers to efficiently deploy workloads across GPU clusters. Developers use CUDA to optimise their models specifically for Nvidia chips. This synergy between high-quality hardware and comprehensive software solutions provides customers with a turn-key product for rapid training and deployment of complex neural networks. Nvidia is in the process of enhancing CUDA so that it can serve as a complete AI software ecosystem tailored for enterprise needs. This ecosystem includes new microservices under the CUDA-X initiative, which simplify the AI workflow by facilitating model fine-tuning, data processing, and retrieval.7 Additionally, the NIM inference framework offers APIs for scalable deployment of these models. This feature is crucial for external technologies to leverage Nvidia infrastructure, particularly in sectors like Healthcare and Financials.8

Broadcom: Diverse Semiconductor Player Well-Positioned for the Next AI Capex Cycle

Broadcom plays a pivotal role in the AI space through its extensive range of integrated hardware solutions, which include chips for networking, broadband, and storage. As AI applications require increasingly complex and swift data transmission, Broadcom's solutions help infrastructure keep pace and enhance global network efficiency. In 2023, Broadcom reported record revenue of US$35.8 billion, up 8% year-over-year (YoY), driven by hyperscalers' investments in accelerators and network connectivity for AI.9

General-purpose GPUs from the likes of Nvidia have dominated AI capex in datacentres thus far, but we believe that Broadcom is poised to capture a significant portion of the next AI capex wave, particularly in networking (Ethernet and InfiniBand) and with its application-specific integrated circuits (ASICs). Moreover, networking infrastructure is becoming a bottleneck as datacentres attempt to scale out AI servers, which suggests a need for increased investment. Global networking spend is forecast to grow at a 26% compound annual growth rate (CAGR) through 2027, surpassing the 16% growth of computer processors.10 Given these dynamics and its diverse portfolio of networking products catering to next-gen AI server clusters, Broadcom is targeting US$10 billion in AI revenue for fiscal year (FY) 2024, up from US$4.2 billion in FY 2023.11 That level of revenue would rank Broadcom as the second-largest AI chip supplier in the world.12 The company also aims to increase total revenue by 40% YoY to US$50 billion.13
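The Broadcom targets above can be cross-checked with simple arithmetic. This is a minimal sketch that assumes the US$35.8 billion revenue figure and the US$4.2 billion AI revenue figure refer to the same fiscal period; the variable and function names are illustrative only.

```python
def yoy_growth(previous: float, current: float) -> float:
    """Year-over-year growth expressed as a fraction."""
    return current / previous - 1


# Figures from the text, in US$ billions.
ai_revenue_fy2023 = 4.2
ai_revenue_fy2024_target = 10.0
total_revenue_2023 = 35.8
total_revenue_target = 50.0

ai_growth = yoy_growth(ai_revenue_fy2023, ai_revenue_fy2024_target)
total_growth = yoy_growth(total_revenue_2023, total_revenue_target)

# AI share of total revenue, assuming both figures cover the same period.
ai_share_2023 = ai_revenue_fy2023 / total_revenue_2023
ai_share_target = ai_revenue_fy2024_target / total_revenue_target

print(f"Targeted AI revenue growth:    {ai_growth:.0%}")
print(f"Targeted total revenue growth: {total_growth:.0%}")
print(f"AI share of revenue: {ai_share_2023:.0%} -> {ai_share_target:.0%}")
```

On these inputs, the AI revenue target implies growth of about 138%, the total revenue target is consistent with the stated 40% increase, and AI's share of revenue would rise from about 12% to 20%.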

Broadcom also distinguishes itself from peers by blending semiconductor solutions with software capabilities. Following its VMware merger, Broadcom can seamlessly integrate server storage and networking hardware with a comprehensive infrastructure suite for on-premises and cloud deployments. Substantial software revenue, thanks to VMware, can support margin and free-cash-flow growth, potentially providing investors with a stable, profitable semiconductor investment amidst sector and macro uncertainties. Broadcom estimates that by FY 2026, the VMware merger could increase revenues by 50% and contribute an additional US$5 billion in annual free cash flow.14 Additionally, Broadcom’s thriving device component business offers the company exposure to a potential AI-induced device upgrade cycle in the coming years.

Forecasts are not guaranteed, and undue reliance should not be placed on them.

Amazon: A Growing AI Giant Addressing the AI Value Chain From All Angles

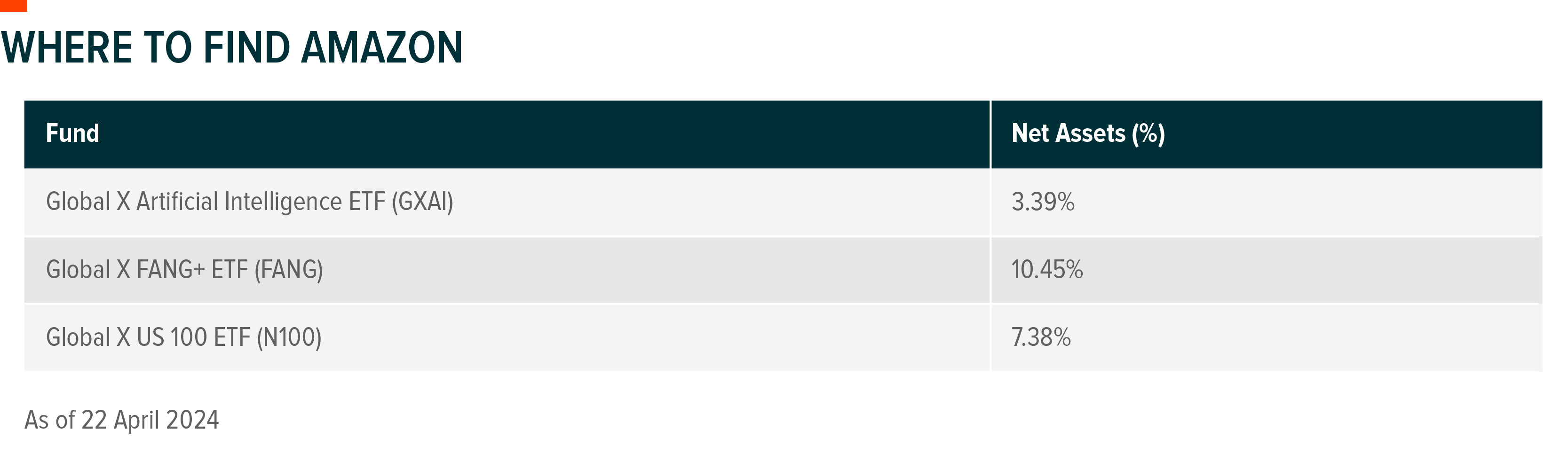

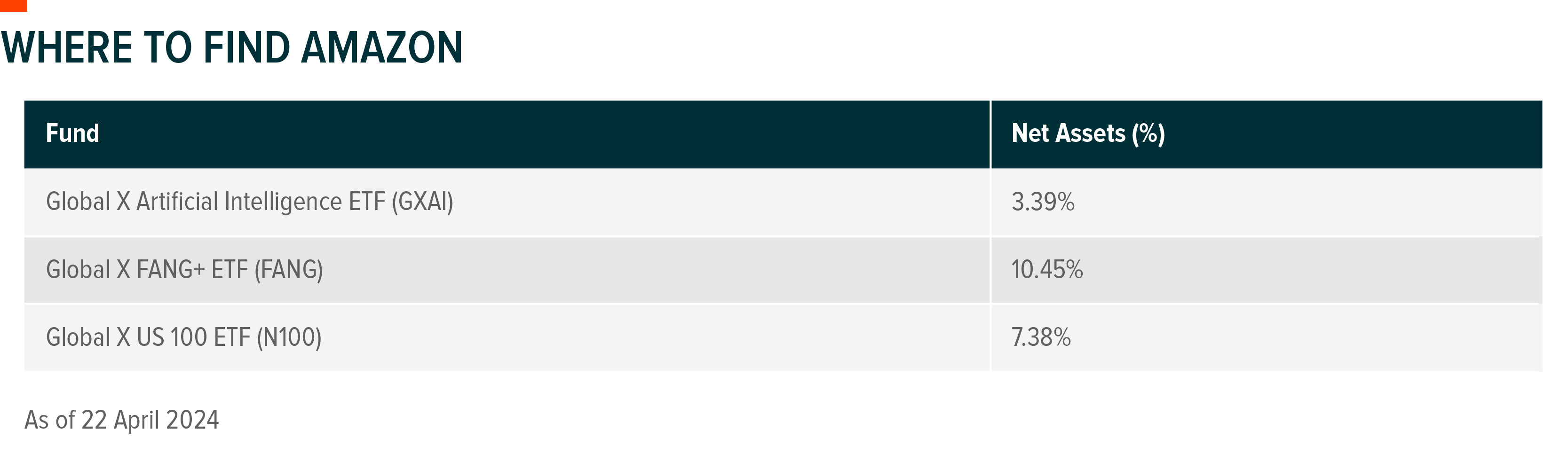

Amazon Web Services (AWS), the largest global cloud provider and the largest profit driver for Amazon, reported incremental quarterly revenue growth throughout 2023, including 12% YoY sales growth in Q4 2023, its most recent reported quarter.15 Generative AI tools like Bedrock in the middle layer of the enterprise tech stack and the Amazon Q coding assistant in the top layer were growth drivers, suggesting Amazon’s early foray into generative AI has it well positioned.

Launched in April 2023, the Bedrock platform enables businesses to build generative AI-powered apps via pre-trained models from third-party startups, including Stability AI, AI21 Labs, and Anthropic, as well as AWS’ in-house Titan foundation models.16 Bedrock provides AWS with a distinct offering tailored exclusively for corporate clients.

AWS can continue to enhance its status as a preferred cloud provider by collaborating with and integrating leading models, which also helps advance AWS’ AI hardware initiatives. A notable recent example is AWS’ US$2.75 billion follow-on investment in Anthropic, an AI startup renowned for its foundation model and Claude chatbot.17 Through this partnership, Anthropic committed to using AWS for cloud services and Amazon’s chips to train and deploy its AI models, joining other prominent clients like Airbnb and Snap.18

This type of partnership is significant as AWS enhances its AI chip capabilities at the bottom of the enterprise stack to compete with the likes of Nvidia and AMD. AWS’ latest Trainium 2 chips offer quadruple the training speed and triple the memory capacity of its Trainium 1 chips.19 AI solutions like these, combined with Amazon’s reach, can go a long way towards reshaping the AI value chain, as vertical integration with semiconductors can create long-term price advantages.

Lastly, Amazon’s use of generative AI in its consumer-facing franchises is also growing, including in the Shopping and Advertising verticals. Earlier this year, Amazon launched Rufus, a generative AI-powered expert shopping assistant trained on Amazon’s product catalogue, reviews, and other information, designed to answer customer questions.20 Similarly, generative AI tools embedded into Amazon’s Ad Console have been helping advertisers improve product images.21 We believe the wider integration of these features is likely to help spur growth throughout Amazon’s non-cloud franchises.

Intel: AI Opportunity Will Present Further Down the Adoption Curve

Intel has long been synonymous with personal computer (PC) microprocessors, but its venture into AI includes significant strides in both hardware and software. Recent innovations designed to accelerate AI workloads on cloud and edge devices enhance Intel’s product suite. Key to its continued growth in AI is the company’s new Gaudi AI chip, which is reported to be twice as power-efficient as Nvidia's H100 GPU and to run AI models 1.5 times faster in testing.22

Intel's unique position as both a processor designer and a manufacturer with its own chip factories sets it apart in the industry and helps it benefit from U.S. initiatives aimed at revitalising domestic chip manufacturing.23 The forthcoming construction of new Intel plants, afforded by recent grants from the U.S. CHIPS Act, will enable Intel to produce AI chips for its own use and manufacture AI chips for other top fabless semiconductor firms, including Nvidia and AMD.24 These capabilities position Intel to potentially increase its market share in the AI chip sector significantly in the coming years, leveraging its dual role to cater to a broader spectrum of the market's needs.

Over time, the scope of AI chips will also expand well beyond datacentres, into electric vehicles (EVs), smartphones, laptops, robotics, medical devices, and more. In parallel, AI training needs are likely to evolve in multiple directions, with niche AI applications requiring simpler, nimbler, and more agile model training processes. This dynamic will drive the need for diverse training and inferencing chips, which is likely to benefit a vast array of semiconductor firms, much like previous technology paradigm shifts. Intel, we believe, is well positioned for this eventual demand as well as for a device upgrade cycle, given its dominant position in datacentre CPUs and PC chips. Intel’s close strategic relationships, such as with autonomous driving company Mobileye, will also give the company unique advantages in key verticals like EVs.

Conclusion: Full Speed Ahead for Transition from Experimental AI to Commercialised AI

The consensus among tech leaders and investors seems clear: investing in AI is essential for long-term product and financial success. As an example of this sentiment, in 2023, global private investment activity in AI more than doubled YoY while deal activity slumped across all sectors.25 The increase in capital flowing into the AI space is set to further influence a wide array of semiconductor firms across the AI value chain. The AI opportunity currently benefits a handful of companies in AI hardware and data & infrastructure, but we expect participation to broaden as it expands into new platforms and verticals. In our view, 2024 marks a significant year as generative AI moves from R&D to real-world applications and revenue generation. This shift is likely to present investors with increasingly diverse opportunities to gain exposure to AI.